Welcome to Stambia MDM.

This guide contains information about using the product to design and develop an MDM project.

Preface

Audience

| If you want to learn about MDM or discover Stambia MDM, you can watch our tutorials. |

| The Stambia MDM Documentation Library, including the development, administration and installation guides is available online. |

Document Conventions

This document uses the following formatting conventions:

| Convention | Meaning |

|---|---|

boldface |

Boldface type indicates graphical user interface elements associated with an action, or a product specific term or concept. |

italic |

Italic type indicates special emphasis or placeholder variable that you need to provide. |

|

Monospace type indicates code example, text or commands that you enter. |

Other Stambia Resources

In addition to the product manuals, Stambia provides other resources available on its web site: http://www.stambia.com.

Obtaining Help

There are many ways to access the Stambia Technical Support. You can call or email our global Technical Support Center (support@stambia.com). For more information, see http://www.stambia.com.

Feedback

We welcome your comments and suggestions on the quality and usefulness

of this documentation.

If you find any error or have any suggestion for improvement, please

mail support@stambia.com and indicate the title of the documentation

along with the chapter, section, and page number, if available. Please

let us know if you want a reply.

Overview

This guide contains information about using the product to design and develop an MDM project.

Using this guide, you will learn how to:

-

Use the Stambia MDM Workbench to design and develop an MDM project.

-

Design the logical model representing your business entities.

-

Design the integration process for certifying golden data from data published from source systems.

-

Deploy the logical models and run the integration processes developed in Stambia MDM Workbench.

| If you want to try Stambia MDM, you can use our demonstration environment and getting started guide: Getting Started with Stambia MDM. |

Introduction to Stambia MDM

Stambia MDM is designed to support any kind of Enterprise Master Data Management initiative. It brings an extreme flexibility for defining and implementing master data models and releasing them to production. The platform can be used as the target deployment point for all master data of your enterprise or in conjunction with existing data hubs to contribute to data transparency and quality with federated governance processes. Its powerful and intuitive environment covers all use cases for setting up a successful master data governance strategy.

Stambia MDM is based on a coherent set of features for all Master Data Management projects.

Unified User Interface

Data architects, business analysts, data stewards and business users all share the same point of entry in Stambia MDM through the single Stambia MDM Workbench user interface accessible through any type of browser. This interface uses rich perspectives to suit every user role and allows team collaboration. The advanced metadata management capabilities enhance the usability of this intuitive interface.

A Unique Modeling Framework

Stambia MDM includes a unique modeling framework that combines both ER and OO modeling with concepts such as inheritance or complex types. Data architects and business analysts use this environment to define semantically complete models that will serve as references for the enterprise. These models include the description of the business entities as well as the rules associated with them.

The Modeling Framework supports:

-

Logical Data Modeling: The expression of the logical data model semantics and rules by business analysts. This includes:

-

The target data model (Entities / Attributes / Relations / List of Values, etc.).

-

The rules for data quality (validations, referential integrity validation, list of values, etc.).

-

The functional decomposition of the model.

-

-

Integration Process Logical Design. This includes:

-

The definition of applications that publish data to the Hub (Publisher)

-

The rules to enrich and standardize data

-

The rules to match and to identify groups of similar records

-

The consolidation rules to produce the reference data (Golden Data)

-

-

Master Data Applications. This includes:

-

The definition of the data consumers via roles

-

The definition of the Privileges associated with these data consumers

-

Assembling the entities from the model into Business Objects

-

Defining views on these Business Objects

-

Assembling the Business Objects to create Application accessed by the business users and data stewards.

-

-

Master Data Workflows Design. This includes:

-

Creating human workflows for managing duplicates.

-

Creating human workflows for data entry or error fixing.

-

Data and Metadata Version Management

The innovative Stambia MDM technology supports an infinite number of

versions and branches of data and metadata. The collaborative process

between the different governance teams set the rules to close the

editions of metadata and data to keep full traceability or to run

multiple projects in parallel.

During the modeling phase, the data architect and business analysts

create their metadata definition until the first semantically data model

is finished. This model is then frozen in an edition and eventually

delivered to production. Subsequent iterations of the data model are

automatically opened. This allows Stambia MDM users

to replay the entire cycle of metadata definition. At any time, project

managers may choose to branch the developments in order to develop two

versions of the model in parallel.

Master Data in a Stambia MDM hub can also be managed with versions. Business processes specific to the enterprise set the rate at which data must be frozen in these versions. A consumer can access data in particular edition of the data and can see the data as it was at a particular moment in time. As a consequence, the company can develop a strategy for managing versions of data, for example during the introduction of new major versions of a model, for simulation projects and what-if analysis, or when launching new catalogs.

Golden Data Certification

Stambia MDM supports the integration of data from any source in batch mode, asynchronous or synchronous mode. The platform manages the lifecycle of data published in the hub. This data is pushed by publishers through existing middleware (ETL, SOA, EAI, Data Integration, etc.). The platform provides standards APIs such as SQL for ETLS, Java and Web Services integration for real-time publishing. The certification process follows a sequence of steps to produce and make available certified reference data (Golden Records). These steps apply all the logical integration rules defined in the modeling phase to certify at all times the integrity, quality and uniqueness of the reference data.

With its Open Plug-in Architecture Stambia MDM can delegate stages of the certification process to any component present in the information system infrastructure.

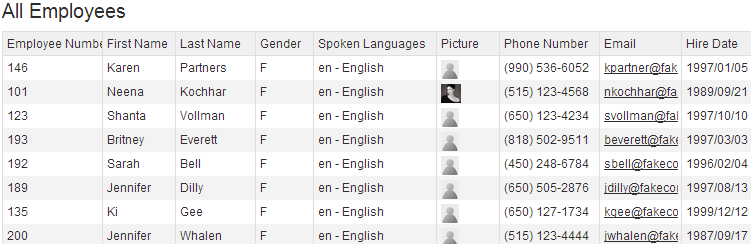

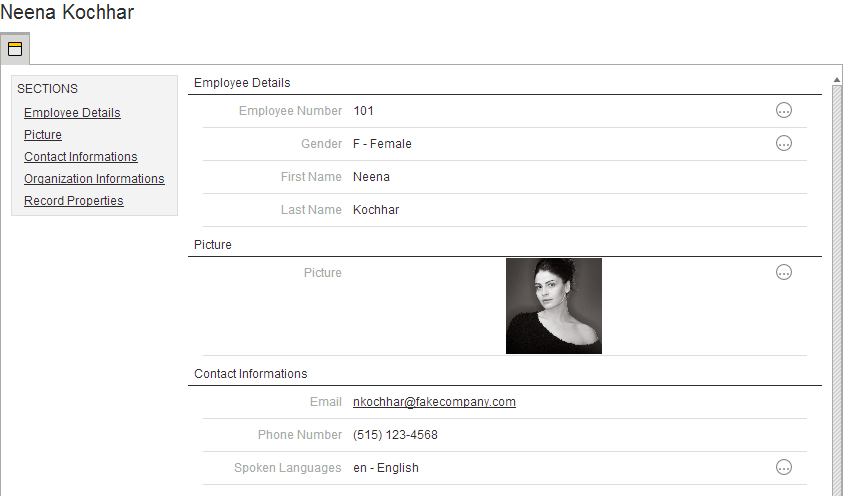

Generated Master Data Applications

Stambia MDM support Master Data Applications generation. These applications provide secured and filtered access to the golden and master data of the hub in business-friendly views in a Web 2.0 interface. Applications also support customized Human Workflows for duplicate management and data entry.

Golden Data Consumption

The certified data is stored in a relational database, which allows Stambia MDM to benefit from higher performance and scalability. The data is made available to consumers across multiple channels to allow a non-intrusive integration with the information system:

-

SQL access to reference data using JDBC or ODBC.

-

SOAP WebService for SOA: The platform generates Web Services to access the reference data from any SOA-enabled system.

Built-in Metrics & Dashboards

The Stambia MDM Pulse component enables business users to collect metrics and measure - with dashboards and KPIs - the health of their Stambia MDM Hub.

Introduction to the Stambia MDM Workbench

Logging In to the Stambia MDM Workbench

To access Stambia MDM Workbench, you need a URL, a user name and password that have been provided by your Stambia MDM administrator.

To log in to the Stambia MDM Workbench:

-

Open your web browser and connect to the URL provided to you by your administrator. For example

http://<host>:<port>/stambiamdm/where<host>and<port>represent the name and port of host running the Stambia MDM application. The Login Form is displayed. -

Enter your user name and password.

-

Click Log In. The Stambia MDM Welcome page opens.

-

Click the Stambia MDM Workbench button in the Design and Administration section. The Stambia MDM workbench opens on the Overview perspective.

Logging Out of the Stambia MDM Workbench

To log out of the Stambia MDM Workbench:

-

In the Stambia MDM Workbench menu, select File > Log Out.

Opening a Model Edition

A model edition is a version of a model. This edition can be in a closed

or open status. Only open editions can be edited.

Opening a model edition connects the workbench to this edition.

| Before opening a model edition, you have to create this model and its first edition. To create a new model and manage model editions, refer to the Models Management chapter. |

To open a model edition:

-

In the Stambia MDM Workbench menu, select File > Open Model Edition

-

In the Open a Model Edition dialog, expand the node for your model and then select a branch in the branch tree. The list of Model Editions for this branch is refreshed with the editions in this branch.

-

Select an edition in this list and then click Finish.

-

The Stambia MDM Workbench changes to the Model Edition perspective and displays the selected model edition.

Working with the Stambia MDM Workbench

The Stambia MDM Workbench is the graphical interface used by all Stambia MDM users. This user interface exposes information in

panels called Views and Editors .

A given layout of views and editors is called a Perspective .

Working with Perspectives

There are several perspectives in Stambia MDM Workbench:

-

Overview: this perspective is the landing page in the Workbench. It allows you to access all the other perspectives and the applications.

-

Model Design: this perspective is used to edit or view a model.

-

Data Locations: this perspective is used to create data locations as well as deploy and manage model and data editions deployed in these locations.

-

Model Administration: this perspective is used to manage model editions and branches.

-

Administration Console: this perspective is used to administer Stambia MDM and monitor run-time activity.

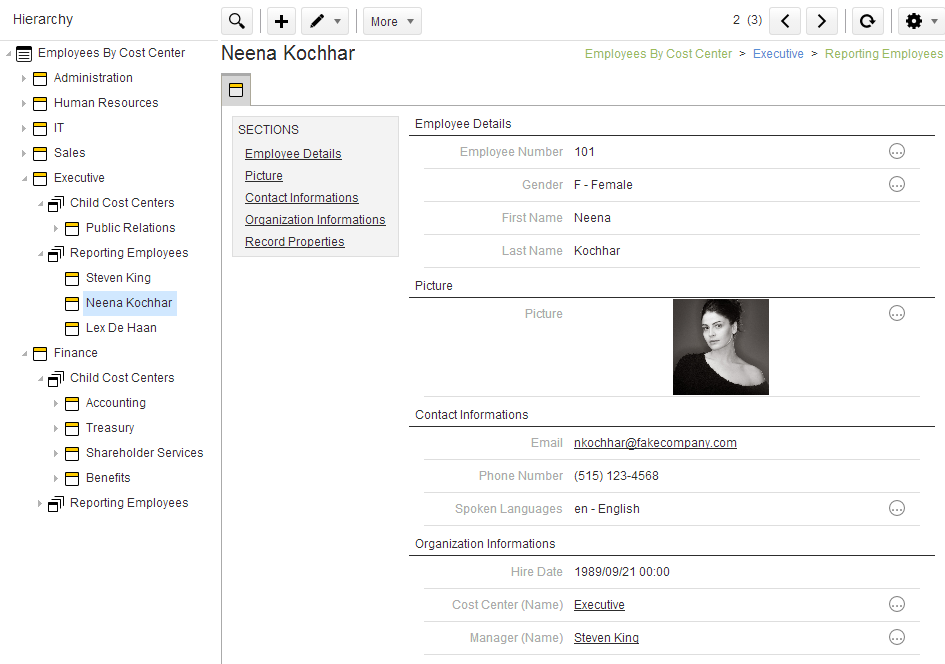

Working with Tree Views

When a perspective opens, a tree view showing the objects you can work

with in this view appears.

This view appears on the left hand-side of the screen.

In this tree view you can:

-

Expand and collapse nodes to access child objects.

-

Double-click a node to open the object’s editor.

-

Right-click a node to access all possible operations with this object.

Working with the Outline

Certain perspectives includes an Outline view. This view shows in tree

view form the object - and all its child objects - in the editor

currently opened.

This view appears on the left hand-side of the screen.

You can use the same expand, double-click, right-click actions in the

outline as in the tree view.

Working with Editors

An object currently being viewed or edited appears in an editor in the

central part of the screen.

You can have multiple editors opened at the same time, each editor

appearing with a different tab.

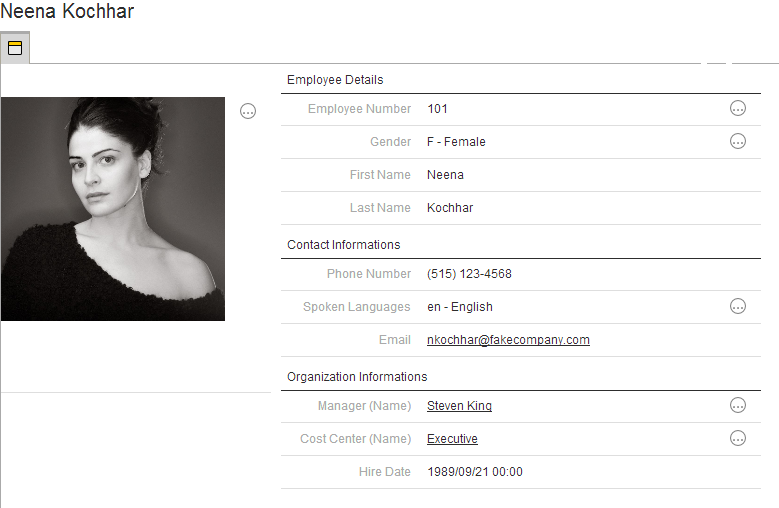

Editor Organization

Editors are organized as follows:

-

The editor has a local toolbar which is used for editor specific operations. For example, refreshing or saving the content of an editor is performed from the toolbar.

-

The editor has a breadcrumb that allows navigating up in the hierarchy of objects.

-

The editor has a sidebar which shows the various sections and child objects attached to an editor. You can click in this sidebar to jump to one of these sections.

-

Properties in the editors are organized into groups, which can be expanded or collapsed.

Saving an Editor

When the object in an editor is modified, the editor tab is displayed

with a star in the tab name. For example, Contact* indicates that the

content of the Contact editor has been modified and need to be saved.

To save an editor, either:

-

Click the Save button in the Workbench toolbar.

-

Use the CTRL+S key combination.

-

Use the File > Save option in the Workbench menu.

You can also use the File > Save All menu option or Save All toolbar button to save all modified editors.

Closing an Editor

To close an editor, either:

-

Click the Close (Cross) icon on the editor’s tab.

-

Use the File > Close option in the Workbench menu.

-

Use the Close option the editor’s context menu (right-click on the editor’s tab).

You can also use the File > Close All menu option or the Close All option the editor’s context menu (right-click on the editor’s tab) to close all the editors .

Accelerating Edition with CamelCase

In the editors and dialogs in Stambia MDM Workbench, the Auto Fill

checkbox accelerates object creation and edition.

When this option is checked and the object name is entered using the

CamelCase, the object Label as well as the Physical Name is

automatically generated.

For example, when creating an entity, if you type ProductPart in the

name, the label is automatically filled in with Product Part and the

physical name is set to PRODUCT_PART.

Duplicating Objects

The workbench support object duplication for Form Views, Tables Views, Search Forms, Business Objects, Business Object Views and Workflows.

To duplication an object:

-

Select the object to duplicate in the Model Edition tree view.

-

Right-click and then select Duplicate.

A copy of the object is created.

It is also possible to duplicate a group of objects. When duplicating a group of objects that reference one another, the references in the copied objects are moved to the copies of the original objects.

For example:

-

When copying a single business object view BOV1 that references a table view TV1, the copy of the business object View (BOV2) still references the table view TV1.

-

When copying a business object view BOV1 with a table view TV1 that it references (the group includes BOV1 and TV1), the copy of the business object view BOV2 references the copy of the table view BOV2.

To duplicate a group of objects:

-

Select the multiple objects to duplicate in in the Model Edition tree view. Press the CTRL key to enable multiple selection.

-

Right-click and then select Duplicate.

A copy of the group of object is created. The links are made if possible to the copied objects.

Working with Diagrams

Diagrams are used to design models and workflows.

The Model Diagram

The Model Diagram Editor shows a graphical representation of a portion

of the model or the entire model.

Using this diagram, you can create entities and references in a

graphical manner, and organize them as graphical shapes.

The diagram is organized in the following way:

-

The Diagram shows shapes representing entities and references.

-

The Toolbar allows you:

-

to zoom in and out in the diagram.

-

to select an automatic layout for the diagram and apply this layout by clicking the Auto Layout button.

-

to select the elements to show in the diagram (attributes, entities, labels or names, foreign attributes, etc)

-

-

The Palette provides a set of tools:

-

Select allows you to select, move and organize shapes in the diagram. This selection tool allows multiple selection (hold the Shift or CTRL keys).

-

Add Reference and Add Entity tools allow you to create objects.

-

Add Existing Entities allows you to create shapes for existing entities.

-

| After choosing a tool in the palette, the cursor changes. Click the Diagram to use the tool. Note that after using an Add… tool, the tool selection reverts to Select. |

For more information about the model diagrams, see the Diagrams section in the Logical Modeling chapter.

The Human Workflow Diagram

The human workflow diagram shows a graphical representation of the human

workflows.

Using this diagram, you can design tasks and transitions for a workflow.

The diagram is organized in the following way:

-

The Diagram shows shapes representing tasks (rectangles) and transitions (links), as well as the start and end events (round shapes).

-

The Toolbar allows you:

-

to zoom in and out in the diagram.

-

to select an automatic layout for the diagram and apply this layout by clicking the Auto Layout button.

-

to select the elements to show in the diagram (name/labels on the tasks and transitions).

-

to validate the workflow. The validation report shows the errors of the workflow.

-

-

The Palette provides a set of tools:

-

Select allows you to select, move and organize shapes in the diagram. This selection tool allows multiple selection (hold the Shift or CTRL keys).

-

Add Task and Add Transition tools allow you to modify the workflow.

-

-

The Properties view allows you to edit the properties of the task or transition selected in the diagram.

In this diagram, you can drag the transition arrows to change the source and target tasks of a transition.

For more information about the human workflow diagrams, see the Creating Human Workflows in the Working with Applications chapter.

Working with Other Views

Other views (for example: Progress, Validation Report) appear in certain perspectives. These views are perspective-dependent.

Workbench Preferences

User preferences are available to configure the workbench behavior. These preferences are stored in the repository and are specific to each user connecting to the workbench. The preferences are applied regardless of the client computer or browser used by the user to access the workbench.

Setting Preferences

Use the Window > Preferences dialog pages to set how you want the workbench to operate.

You can browse the Preferences dialog pages by looking through all the titles in the left pane or search a smaller set of titles by using the filter field at the top of the left pane. The results returned by the filter will match both Preference page titles and keywords such as general and stewardship.

The arrow controls in the upper-right of the right pane enable you to navigate through previously viewed pages. To return to a page after viewing several pages, click the drop-down arrow to display a list of your recently viewed preference pages.

The following preferences are available in the Preferences dialog:

-

Data Stewardship Preferences apply to the data stewardship applications:

-

Data Page Size: Size of a page displaying a list of records.

-

Show Hierarchy Navigation: Select this option to display the Hierarchical view of the records in the outline.

-

Show Lineage Navigation: Select this option to display the Lineage view of the records in the outline.

-

Predictable Navigation: Select to option to enable automated record sorting when opening a list of records. This option provides a predictable list of records for each access. This option is not recommended when accessing large data sets as sorting may be a time consuming operation.

-

List of Values Export Format: Format used when exporting attribute values with a list of values type.

-

List of Values Display Format: Format used when displaying attributes with a list of values type.

-

-

General Preferences:

-

Date Format: Format used to display the date values in the workbench. This format uses Java’s SimpleDataFormat patterns.

-

DateTime Format: Format used to display the date and time values in the workbench. This format uses Java’s SimpleDataFormat patterns.

-

Link Perspective to Active Editor: Select this option to automatically switch to the perspective related to an editor when selecting this editor.

-

Under the Data Stewardship preferences node, preference pages are listed for the data locations accessed by the user and for the entities under these data locations. These preference pages display no preferences and are used to reset the Filters, Sort and Columns selected by the user for the given entities, as well as the layout of the editors for these entities (the collapsed sections, for example). To reset the preferences on a preferences page, click the Restore Defaults button in this page.

Exporting and Importing User Preferences

Sharing preferences between users is performed using preferences import/export.

To export user preferences:

-

Select File > Export. The Export wizard opens.

-

Select Export Preferences in the Export Destination and then click Next.

-

Click the Download Preferences File link to download the preferences to your file system.

-

Click Finish to close the wizard.

To import user preferences:

-

Select File > Import. The Import wizard opens.

-

Select Import Preferences in the Import Source and then click Next.

-

Click the Open button to select an export file.

-

Click OK in the Import Preferences Dialog.

-

Click Finish to close the wizard.

Importing preferences replaces all the current user’s preferences by those stored in the preferences file.

Working with SemQL

| This section provides a quick introduction to the SemQL language. For a detailed description of this language with examples, refer to the Stambia MDM SemQL Reference Guide. |

SemQL is a language to express declarative rules in Stambia MDM Workbench. It is used in Enrichers, Matchers, Validations, Filters and Consolidators.

SemQL Language Characteristics

The SemQL Language has the following characteristics:

-

The syntax is close to the Oracle Database SQL language and most SemQL functions map to Oracle functions.

-

SemQL is not a query language: It does not support Joins, Sub-queries, Aggregation, in-line Views and Set Operators.

-

SemQL is converted on the fly and executed by the hub database.

-

SemQL uses Qualified Attribute Names instead of columns names. The code remains implementation-independent.

Qualified Attribute Names

A Qualified Attribute Name is the path to an attribute from the

current entity being processed.

This path not only allows accessing the attributes of the entity, but

also allows access to the attributes of the entities related to the

current entity.

Examples:

-

FirstName: Simple attribute of the current Employee entity. -

InputAddress.PostalCode: Definition Attribute (PostalCode ) of the InputAddress Complex Attribute used in the current Customer entity. -

CostCenter.CostCenterName: Current Employee entity references the CostCenter entity and this expression returns an employee’s cost center name -

CostCenter.ParentCostCenter.CostCenter: Same as above, but the name is the name of the cost center above in the hierarchy. Note that ParentCostCenter is the referenced role name in the reference definition. -

Record1.CustomerName: CustomerName of the first record being matched in a matcher process.Record1andRecord2are predefined qualifiers in the case of a matcher to represent the two records being matched.

SemQL Syntax

The SemQL Syntax is close to the Oracle Database SQL language for creating expressions , conditions and order by clauses.

-

Expressions contain functions and operators, and return a value of a given type.

-

Conditions are expression returning true or false. They support AND, OR, NOT, IN, IS NULL, LIKE, REGEXP_LIKE, Comparison operators (=, !=, >, >=, <, ⇐), etc.

-

Order By Clauses are used to sort records in a set of results using an arbitrary combination of Expressions sorted ascending or descending

In expressions, conditions and order by clauses, it is possible to use the SemQL functions. The list of SemQL functions is provided in the SemQL Editor

| SemQL is not a query language: SELECT, UPDATE or INSERT queries are not supported, as well as joins, sub-queries, aggregates, in-line views, set operators. |

SemQL Examples

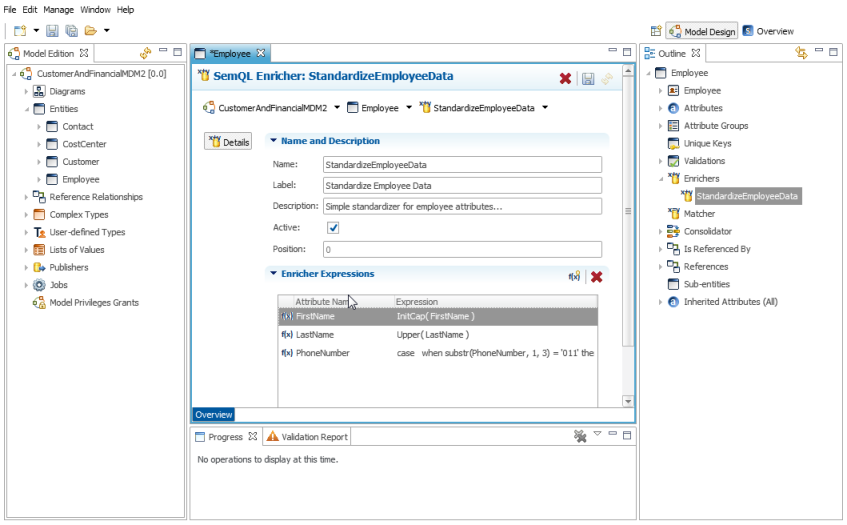

Enricher Expressions

-

FirstName:

InitCap(FirstName) -

Name:

InitCap(FirstName) || Upper(FirstName) -

City:

Replace(Upper(InputAddress.City),'CEDEX','')

In these examples, InitCap, Upper and Replace are SemQL functions.

The concatenate operator || is also a SemQL operator.

Validation Conditions

-

Checking the Customer’s InputAddress complex attribute validity:

InputAddress.Address is not null and ( InputAddress.PostalCode is not null or InputAddress.City is not null)

In this example, the IS NOT NULL, AND and OR SemQL operators are

used to build the condition.

Matcher

-

Binning Expression to grouping customers by their Country/PostalCode:

InputAddress.Country || InputAddress.PostalCode

-

Matching Condition: Matching two customer records by name, address and city name similarity:

SEM_EDIT_DISTANCE_SIMILARITY( Record1.CustomerName, Record2.CustomerName ) > 65 and SEM_EDIT_DISTANCE_SIMILARITY( Record1.InputAddress.Address, Record2.InputAddress.Address ) > 65 and SEM_EDIT_DISTANCE_SIMILARITY( Record1.InputAddress.City, Record2.InputAddress.City ) > 65

In this last example, SEM_EDIT_DISTANCE_SIMILARITY is a SemQL

function. Record1 and Record2 are predefined names for qualifying

the two record to match.

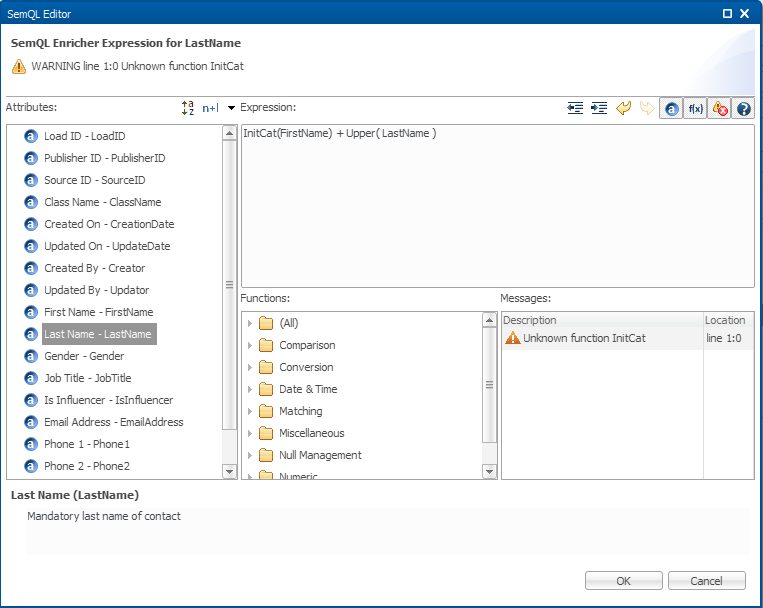

The SemQL Editor

The SemQL editor can be called from the workbench when a SemQL expression, condition or clause needs to be built.

This editor is organized as follows:

-

Attributes available for the expression appear in left panel. Double-click an attribute to add it to the expression.

-

Functions declared in SemQL appear in the left bottom panel, grouped in function groups. Double-click a function to add it to the expression. You can declare your own PL/SQL functions in the model so that they appear in the list of functions.

-

Messages appear in the right bottom panel, showing parsing errors and warnings.

-

Description for the selected function or attribute appear at the bottom of the editor.

-

The Toolbar allows to indent the code or hide/display the various panels of the editor and to undo/redo code edits.

Declaring PL/SQL Functions for SemQL

You can use in SemQL customized functions implemented using PL/SQL.

You must declare these functions in the model to have them appear in the list of functions.

| Functions that are not declared can still be used in SemQL, but will not be recognized by the SemQL parser and will cause validation warnings. |

To declare a PL/SQL function:

-

Right-click the PL/SQL Functions node in the Model Edition view and select Add PL/SQL Function. The Create New PL/SQL Function wizard opens.

-

In the Create New PL/SQL Function wizard, enter the following values:

-

Name: Name of the PL/SQL function. This name must exactly match the name of the function in Oracle. Note that if this function is part of a package, the function name must be prefixed by the package name.

-

Schema: The Oracle schema containing this function/package.

-

Categories: Enter or select the function categories of the SemQL Editor into which this function should appear.

-

-

Click Finish to close the wizard. The PL/SQL Function editor opens.

-

In the Description field, enter a detailed description for the function.

-

Click the Add Argument button in the Function Arguments section to declare an argument for the function.

The new argument is added to the Function Arguments table.-

Edit the Name of this argument in the Function Arguments table.

-

Select whether this argument is Mandatory and whether it is an Array of values.

-

-

Repeat the previous step to declare all the arguments.

-

Use the Move Up and Move Down buttons to order the argument according to your function implementation.

-

Press CTRL+S to save the editor.

-

Close the editor.

| Only declare the Schema if the function is available in this given schema in all the environments (development, test, productions) into which the model will be deployed. If it is not the case, it is recommended not to use a schema name and to create Oracle synonyms to make your function available in all environments from the data location schema. |

| A Mandatory argument cannot follow a non-mandatory one. An Array argument must always the last argument in the list. |

Working with Plug-ins

Plug-ins allow extending the capabilities of the Stambia MDM using Java code and external APIs.

Plug-ins are used to implement enrichers or validations not feasible in

SemQL.

The plug-ins have the following characteristics:

-

All plug-ins take Inputs (mapped on attributes) and Parameters, which may be mandatory (or not).

-

Enricher Plug-ins return Outputs (mapped on attributes to enrich). A subset of these outputs may be used for enriching attributes.

-

Validation Plug-ins return a Boolean value indicating whether or not the values of the input are valid.

Examples of plug-ins:

-

Enricher: Geocoding using an external API (Google, Yahoo, etc.)

-

Validation: Pattern Matching, Validating against an external API, etc.

More information:

-

For more information on using Plug-ins, refer to the Logical Modeling and Integration Process Design chapters.

-

For more information on developing Plug-ins, refer to the Stambia MDM Plug-in Developer’s Guide.

Logical Modeling

Logical modeling allows defining the business entities that make up the model.

Introduction to Logical Modeling

In Stambia MDM, modeling is performed at the logical level. You do not design physical objects such as tables in a model, but logical business entities independently from their implementation.

Logical Model Objects

Logical modeling includes creating the following objects:

-

Customized types such as User-Defined Types, Complex Types and List of Values reused across the model.

-

Entities representing the business objects with their Attributes.

-

References Relationships linking the entities of the model, for example to build hierarchies.

-

Constraints such as Validations, Unique Keys and Mandatory attributes.

-

Display Names, Table Views, Table Views and Business Objects defining how the entities appear to the data stewards and end-users.

In the modeling phase, integration processes objects such as Enrichers, Matchers and Consolidators are also created. Creating these objects is described in the Integration Process Design chapter. After completing the modeling phase, you can create Applications to access the data stored in your model.

Objects Naming Convention

When designing a logical model, it is necessary to enforce a naming

convention to guarantee a good readability of the model and a clean

implementation.

There are several names and labels used in the objects in Stambia MDM:

-

Internal Names (Also called Names) are used mainly by the Model designers and are unique in the model. They can only contain alphanumeric characters, underscores and must start with a letter.

-

Physical Names and Prefixes are used to create the objects in the database corresponding to the logical object. These can only contain uppercase characters and underscores.

-

Labels and Descriptions are visible to the users (end-users and data stewards) consuming data through the UI. These user-friendly labels and descriptions can be fixed at later stages in the design. They are externalized and can be localized (translated) in the platform.

The following tips should be used for naming objects:

-

Use meaningful Internal Names. For example, reference relationships should all be named after the pattern

<entity name><relation verb><entity name>, like CustomerHasAccountManager . -

Do not try to shorten internal names excessively. They may become meaningless. For example, using CustAccMgr instead of CustomerHasAccountManager is not advised .

-

Use the CamelCase for internal names as it enables the use of the Auto fill feature. For example, ContactBelongsToCustomer, GeocodedAddressType.

-

Define team naming conventions that accelerate object type identification. For example, types and list of values can be post-fixed with their type such as GeocodedAddressType, GenderLOV.

-

Define user-friendly Labels and Descriptions. Internal Names are for the model designers, but labels and descriptions are for end users.

Model Validation

A model may be valid or invalid. An invalid model is a model that contains a number of design errors. When a model is invalid, you cannot deploy it, and you cannot close this model edition. Model validation detects errors or missing elements in the model.

To validate the model:

-

In the Model Edition view of the Model Design Perspective, select the model node at the root of the tree. You can alternately select on entity to validate only this entity.

-

Right-click and select Validate.

-

The validation process starts. At the end of the process, the list of issues (errors and warnings) is displayed in the Validation Report view. You can click an error or waning to open the object causing this issue.

| It is recommended to regularly run the validation on the model or on specific entities. Validation may guide you in the process of designing a model. You can perform regular validations to assess how complete the model really is, and you need to pass model validation before deploying or closing a model edition. |

Generating the Model Documentation

When a model is complete or under development, it is possible to generate a documentation set for this model.

This documentation set may contain the following documents:

-

The Logical Model Documentation, which includes a description of the logical model and the rules involved in the integration processes.

-

The Applications Documentation, which includes a description of the applications of the model, their related components (views, business objects, etc.) as well as their workflows.

-

The Physical Model Documentation, which includes a description of the physical tables generated in the data location when the model is deployed. This document is a physical model reference document for the integration developers.

The documentation is generated in HTML format and supports hyperlink navigation within and between documents.

To generate the model documentation:

-

In the Model Edition view of the Model Design Perspective, select the model node at the root of the tree.

-

Right-click and select Export Model Documentation.

-

In the Model Documentation Export dialog, select the documents to generate.

-

Select the appropriate Encoding and Locale for the exported documentation. The local defines the language of the generated documentation.

-

Click OK to download the documentation.

The documentation is exported in a zip file containing the selected documents.

| It is possible to export the model documentation only for a valid model. |

Types

Several types can be used in the Stambia MDM models:

-

Built-in Types are part of the platform. For example: string, integer, etc.

-

List of Values (LOVs) are a user-defined list of string codes and labels. For example: Gender (M:Male, F:Female), VendorStatus (OK:Active, KO:Inactive, HO:Hold).

-

User Defined Types are user restriction on a built-in type. For example the GenericNameType type can be defined as a String(80) and the ZipCodeType can be used as an alias for Decimal (5,0).

-

Complex Types are a customized composite type made of several Definition Attributes using Built-in Type, User-Defined Type or a List of Values. For example, an Address complex type has the following definition attributes: Street Number, Street Name, Zip Code, City Name and Country.

All these type as the user-defined are reused across the entire model.

| A list of values, user-defined or complex type is designed in the model and can be used across the entire model. Changes performed to such a type impact the entities and attributes using this type. To list the attributes using a type and analyze the impact of changing a type, open the editor for this type and then select the Used in item in the left sidebar. |

Built-in Types

Built-in types are provided out of the box in the platform.

Built-in types include:

-

Numeric Types:

-

ByteInteger: 8 bytes signed. Range [–128 to 127]

-

ShortInteger: 16 bytes signed. Range [–32,768 to –32,767]

-

Integer: 32 bytes signed. Range [–2^32 to (2^32-1)]

-

LongInteger: 64 bytes signed. Range [–2^64 to (2^64-1)]

-

Decimal: Number(Precision,Scale), where Precision in [1-38] and Scale in [–84-127]

-

-

Text Types:

-

String: Length smaller than 4000 characters.

-

LongText: No size limit – Translated to a CLOB in the database

-

-

Date Types:

-

Datetime: Date and Time (to the Second)

-

Timestamp: Date and Time (to fractional digits of a Second)

-

-

Binary Types:

-

Binary: Store any type of binary content (image, document, movie, etc.) with no size limit – Translated to a BLOB in the database

-

-

Misc. Types:

-

UUID: 16 bytes Global Unique ID

-

Boolean: 1 character containing either `1' (true) or `0' (false).

-

List of Values

List of Values (LOVs) are a user-defined list of code and label pairs.

They are limited to 1,000 entries and can be imported from a Microsoft

Excel Spreadsheet.

Examples:

-

Gender (M:Male, F:Female)

-

VendorStatus (OK:Active, KO:Inactive, HO:Hold)

| Lists of values are limited to 1,000 entries. If a list of value needs to contain more than 1,000 entries, you should consider implementing in the form of an entity instead. |

To create a list of values:

-

Right-click the List of Values node and select Add List of Values…. The Create New List of Values wizard opens.

-

In the Create New List of Values wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the list of values.

-

Label: User-friendly label in this field. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Length: Length of the code for the LOV.

-

-

Click Finish to close the wizard. The List of Values editor opens.

-

In the Description field, optionally enter a description for the user-defined type.

-

Add values to the list using the following process:

-

In the Values section, click the Add Value button. The Create New LOV Value dialog appears.

-

In this dialog, enter the following values:

-

Code: Code of the LOV value. This code is the value stored in an entity attribute.

-

Label: User-friendly label displayed for a field having this value.

-

Description: Long description of this value.

-

-

Click Finish to close the dialog.

-

-

Repeat the previous operations to add the values. You can select a line in the list of value and click the Delete button to delete this line. Multiple line selection is also possible.

-

Press CTRL+S to save the editor.

-

Close the editor.

List of values can be entered manually as described above and can be translated.

In addition, you can also import values or translated values from a

Microsoft Excel Spreadsheet.

This spreadsheet must contain only one sheet with three columns

containing the Code, Label and Description values. Note that the first

line of the spreadsheet will be ignored in the import process.

To import a list of values from an excel spreadsheet:

-

Open the editor for the list of value.

-

Expand the Values section.

-

In the Values section, click the Import Values button. The Import LOV Values wizard appears.

-

Use the Open button to select a Microsoft Excel spreadsheet.

-

Choose the type of import:

-

Select Import codes, default labels and descriptions to simply import a list of codes, default labels and descriptions.

-

Select Import translated labels and description for the following locale then select a locale from the list to import translated labels and descriptions in a given language. Click the Merge option to only update existing and insert new translations. If you uncheck this box, the entire translation is replaced with the content of the Excel file. Entries not existing in the spreadsheet are removed.

-

-

Click Next . The changes to perform are computed and a report of object changes is displayed.

-

Click Finish to perform the import. The Import LOV wizard closes.

-

Press CTRL+S to save the editor.

-

Close the editor.

User-Defined Types

User-Defined Types (UDTs) are user restriction on a built-in type. They can be used as an alias to a built-in type, restricted to a given length/precision.

Examples:

-

GenericNameType type can be defined as a String(80)

-

ZipCodeType can be used as an alias for Decimal(5,0).

To create a user-defined type:

-

Right-click the User-defined Types node and select Add User-defined Type…. The Create New User-defined Type wizard opens.

-

In the Create New User-defined Type wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the user-defined type

-

Label: User-friendly label in this field. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Built-in Type: Select a type from the list.

-

Length, Precision, Scale. Size for this user defined type. The fields available depend on the built-in type selected. For example a String built-in type will only allow entering a Length.

-

-

Click Finish to close the wizard. The User-defined Type editor opens.

-

In the Description field, optionally enter a description for the user define type.

-

Press CTRL+S to save the editor.

-

Close the editor.

Complex Types

Complex Types are a customized composite type made of several Definition Attributes using Built-in Type, User-Defined Type or a List of Values.

For example, an Address complex type has the following definition attributes: Street Number, Street Name, Zip Code, City Name and Country.

To create a complex type:

-

Right-click the Complex Types node and select Add Complex Type…. The Create New Complex Type wizard opens.

-

In the Create New Complex Type wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the object.

-

Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

-

Click Finish to close the wizard. The Complex Type editor opens.

-

In the Description field, optionally enter a description for the complex type.

-

Select the Definition Attributes item in the editor sidebar.

-

Repeat the following steps to add definition attributes to this complex type:

-

Select the Add Definition Attribute… button. The Create New Definition Attribute wizard opens.

-

In the Create New Definition Attribute wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the object.

-

Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Physical Column Name: Name of the physical column containing the values for this attribute. This column name is prefixed with the value of the Physical Prefix specified on the entity complex attribute of this complex type.

-

Type: List of values, built-in or user-defined type of this complex attribute.

-

Length, Precision, Scale. Size for this definition attribute. The fields available depend on the built-in type selected. For example a String built-in type will only allow entering a Length. If a list of values or a user-defined type was selected, these values cannot be changed.

-

Mandatory: Check this box to make this definition attribute mandatory when the complex type is checked for mandatory values.

-

-

Click Finish to close the wizard. The new definition attribute appears in the list. You can double-click the attribute in the list to edit it further and edit its advanced properties (see below).

-

-

Press CTRL+S to save the Complex Type editor.

-

Close the editor.

A complex type has the following advanced properties that impact its behavior:

-

Mandatory: When an entity attribute is checked for mandatory values, and this attribute uses a complex type, each of the definition attributes of this complex type with the mandatory option selected are checked.

-

Searchable: This option defines whether this attribute is used for searching.

-

Translated: Options reserved for a future use.

-

Multi-Valued: This option applies to definition attributes having the type list of values. Checking this box allows the definition attribute to receive several codes in the list of values, separated by the Value Separator provided. For example, a multi-valued Diplomas field can receive the DM, DP, DPM codes meaning that that contact is Doctor of Medicine, Pharmacy and Preventive Medicine.

Entities

Entities are the key components of the logical modeling. They are not database tables, but they represent Business Entities of the domain being implemented in the MDM Project. Example of entities: Customers, Contacts, Parties, etc.

Entity Characteristics

Entities have several key characteristics. They are made of Attributes, have a Matching Behavior and References. They also support Inheritance.

Attributes

Entities have a set of properties, called Attributes. These attributes can be either:

-

Simple Attributes using a built-in types, user-defined types or a list of values created in the model.

-

Complex Attributes using complex types created in the model.

For example, the Contact entity may have the following attributes:

-

FirstName and LastName: Simple attributes using the user-defined type called GenericNameType

-

Comments: Simple attribute using the built-in type LongText.

-

Gender: Simple attributes based on the GenderLov list of values.

-

Address: Complex Attribute using the GeocodedAddress complex type.

Matching Behavior

Each entity has a given a matching behavior. This matching behavior expresses how similar instances (duplicates) of this entity are detected:

-

ID Matching (formerly known as UDPK): Records in entities using ID Matching are matched if they have the same ID. This matching behavior is well suited when there is a true unique identifier for all the applications communicating with the MDM hub and for simple Data Entry use cases.

-

Fuzzy Matching (formerly known as SDPK): Records in entities using Fuzzy Matching are matched using a set of matching rules defined in a Matcher.

| The choice of a Matching Behavior is important. Please take into account the following differentiators when creating an entity. |

ID Matching

-

ID Matching means that all applications in the enterprise share a common ID. It may be a Customer ID, an SSID, etc.. This ID can be used as the unique identifier for the golden records.

-

This ID is stored into a single attribute which will be the golden data Primary Key. If the ID in the information system is composed of several columns, you must concatenate these values into the PK column.

-

As this ID is common to all systems, matching is always be made using this ID.

-

A Matcher can be defined for the entity, for detecting potential duplicates when manually creating records in the hub via a data entry workflow.

Use ID Matching only when there is a true unique identifier for all the applications communicating with the MDM Hub, or for simple data entry use cases.

Fuzzy Matchings

-

Fuzzy Matching means that applications in the enterprise have different IDs, and Stambia MDM needs to generate a unique identifier (Primary Key - PK) for the golden records. This PK can be either a sequence or a Unique ID (UUID).

-

Similar records may exist in the various systems, representing the same master data. These similar records must be matched using fuzzy matching methods that compare their content.

-

A Matcher must be defined in such entity to describe how source records are matched as similar records to be consolidated into golden records.

Use Fuzzy Matching only when you do not have a shared identifier for all systems, or when you want to perform fuzzy matching and consolidation on the source data.

ID Generation

The matching behavior impacts the method used for generating the values

for the Golden Record Primary Key:

-

ID Matching Entities: The Golden Record Primary Key is also the ID that exists in the source systems. It may be generated in the MDM hub only when creating new records in data entry workflows. In this case, the ID may be generated either manually (the user enters it in the form), or automatically using a Sequence or a Universally Unique Identifier generator.

-

Fuzzy Matching Entities: The Golden Record Primary Key is managed and always generated by the system, using a Sequence or a Universally Unique Identifier generator. When creating records in data entry workflows, a Source ID is automatically generated and can be manually modified by the user.

| When generating IDs automatically using a Sequence in data entry forms for an ID Matching entity, you must take into account records pushed by other publishers (using for example a data integration tool). These publishers may use the same IDs for the same entity, and in this case the records will match by ID. If you want to separate records entered manually from other publishers’ records and avoid unexpected matching, configure your sequence using the Start With option to start beyond the range of IDs used by the other publishers. |

References

Entities are related using Reference Relationships. A reference relationship defines a relation between two entities. For example, an Employee is related to a CostCenter by a EmployeeHasCostCenter relation.

Constraints

Data quality rules are created in the design of an entity. These constraints include:

-

Mandatory columns

-

List of Values range check

-

Unique Key

-

Record level Validations.

-

Reference Relationships

These constraints are checked on the source records and the consolidated records as part of the integration process. They can also be checked to enforce data quality in data entry workflows .

Inheritance

Entities can extend other entities (Inheritance). An entity (child) can

be based on another entity (parent).

For example, the PersonParty and OrganizationParty entities inherit

from the Party entity.

They share all the attributes of their parent but have specificities.

When inheritance is used:

-

The child entity inherits the following elements: Attributes, Unique Keys, Validations, Enrichers, References and Display Name.

-

Matchers and Consolidators are not inherited

-

It is not possible to modify the matching behavior. The child inherits from the parent’s behavior.

-

It is possible to add elements to the child entity: Attributes, Unique Keys, Validations, Enrichers and References.

-

The display name defined for the parent entity can be amended in a child entity by appending additional attributes from the child. The separator can be changed.

When using inheritance, the underlying physical tables generated for the child entities and parent entity are the same. They contain a superset of all the attributes in the cluster of entities.

Display Options

Entities also have display options, including:

-

A Display Name defining how the entity is display in compact format.

-

Translations to display the entities information in a client’s locale.

Integration Rules

In addition to the display characteristics, an entity is designed with integration rules describing how master data is created and certified from the source data published by the source applications.

These characteristics are detailed in the Integration Process Design chapter.

Creating an Entity

Creating a New Entity

To create an entity:

-

Right-click the Entities node and select Add Entity…. The Create New Entity wizard opens.

-

In the Create New Entity wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the object.

-

Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Plural Label: User-friendly label for this entity when referring to several instances. The value for the plural label is automatically generated from the Label value and can be optionally modified.

-

Extends Entity: Select an entity that you want to extend in the context of Inheritance. Leave this field to an empty value if this entity does not extend an existing one.

-

If the entity extends an existing one, the remaining options cannot be changed as they are inherited. Click Finish to close the wizard and then press CTRL+S to save the editor.

-

If you do not use inheritance, proceed to the next step.

-

-

Physical Table Name: This name is used to name the physical table that will be created to store information about this entity. For example, if the physical table is CUSTOMER, then the golden data is stored in a GD_CUSTOMER table.

-

Matching Behavior: Select the matching behavior for this entity. ID Matching or Fuzzy Matching. See Matching Behavior for more information.

-

-

Click the Next button.

-

In the Primary Key Attribute screen, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the primary key attribute.

-

Label: User-friendly label for this primary key attribute.

-

Physical Column Name: This name is used to name the physical column that will be created to store values for this attribute.

-

ID Generation: Select the method for generating the primary key:

-

Sequence: Use this option to generate the ID as a sequential number. You can specify a startup value for the sequence in the Starts With field.

-

UUID: Use this option to generate the ID as a Universally Unique Identifier.

-

Manual: Use this option to type in the ID manually. This option is only possible for ID Matching entities. When this option is selected, you can choose the Type and Length for the primary key attribute.

-

-

-

Click Finish to close the wizard. The Entity editor opens.

-

In the Description field, optionally enter a description for this entity.

-

Press CTRL+S to save the editor.

When an entity is created, it contains no attributes. Simple Attributes and Complex Attributes can be added to the entity now.

| You cannot modify the entity matching behavior, primary key or inheritance from the Entity editor. To change such key properties of the entity after creating it, you must use the Alter Entity option. See Altering an Entity for more information. |

Adding a Simple Attribute

To add a simple attribute:

-

Expand the entity node, right-click the Attributes node and select Add Simple Attribute…. The Create New Simple Attribute wizard opens.

-

In the Create New Simple Attribute wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the object.

-

Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Physical Column Name: This name is used to name the physical column that will be created to store values for this attribute.

-

Type: Select the type of the attribute. This type can be a built-in type, a user-defined type or a list of values.

-

Length, Precision, Scale. Size for this definition attribute. The fields available depend on the built-in type selected. For example a String built-in type will only allow entering a Length. If a list of values or a user-defined type was selected, these values cannot be changed.

-

Mandatory: Check this box to make this attribute mandatory.

-

Mandatory Validation Scope: This option is available if the Mandatory option was selected. Select whether this attribute should be checked for null values pre and/or post consolidation. For more information, refer to the Integration Process Design chapter.

-

LOV Validation Scope: This option is available if the selected type is a list of values. It defines whether the attribute’s value should be checked against the codes listed in the LOV. For more information, refer to the Integration Process Design chapter.

-

-

Click Finish to close the wizard. The Simple Attribute editor opens.

-

In the Description field, optionally enter a description for the simple attribute.

-

Press CTRL+S to save the editor.

Adding a Complex Attribute

To add a complex attribute:

-

Expand the entity node, right-click the Attributes node and select Add Complex Attribute…. The Create New Complex Attribute wizard opens.

-

In the Create New Complex Attribute wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the object.

-

Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Physical Prefix: This name is used to prefix the physical column that will be created to store values for this complex attribute. The column name is

<Physical Prefix>_<Definition Attribute Physical Column Name> -

Complex Type: Select the complex type of the attribute.

-

Mandatory Validation Scope: This option is available if the Mandatory option was selected for at least one of the definition attributes of the selected complex type. Select whether the mandatory definition attributes of the complex type should be checked for null values pre and/or post consolidation. For more information, refer to the Integration Process Design chapter.

-

LOV Validation Scope: This option is available if at least one of the definition attributes of the complex type is a list of values. It defines whether the definition attribute’s value should be checked against the codes listed in the LOV. For more information, refer to the Integration Process Design chapter.

-

-

Click Finish to close the wizard. The Complex Attribute editor opens.

-

In the Description field, optionally enter a description for the complex attribute.

-

Press CTRL+S to save the editor.

Working with Attributes

It is possible to edit, order or delete attributes in an entity from the Attributes list in the entity editor.

To order the attributes in an entity:

-

Open the editor for the entity.

-

Select the Attributes item in the sidebar.

-

Select an attribute in the Attributes list and use the Move Up and Move Down buttons to order this attribute in the list.

| With entities inheriting attributes from a parent in the context of Inheritance and have additional (not inherited) attributes, you can perform this ordering from the Inherited Attributes (All) section. Note that it is not possible to order additional attributes before the inherited attributes. |

To delete attributes from an entity:

-

Open the editor for the entity.

-

Select the Attributes item in the sidebar.

-

Use the Delete buttons to remove the attribute from the list.

-

Click OK in the confirmation dialog.

| With entities inheriting attributes from a parent in the context of Inheritance and have additional (not inherited) attributes, you can delete attributes from the Inherited Attributes (All) section. |

| Deleting an inherited attribute on a child entity removes it from the parent entity, and by extension from all the child entities inheriting this attribute from the parent. |

Altering an Entity

Altering an entity allows modifying the key properties of an entity, including its matching behavior, inheritance and primary key attribute.

To alter an entity:

-

Select the entity node, right-click and select Alter Entity. The Modify Entity wizard opens.

-

In the wizard, modify the properties of the entity using the same process used for Creating a New Entity

Reference Relationships

Reference Relationships functionally relate two existing entities. One

of them is the referenced, and one is referencing.

For example:

-

In an EmployeeHasCostCenter reference, the referenced entity is CostCenter, the referencing entity is Employee.

-

In a CostCenterHasParentCostCenter reference, the referenced entity is CostCenter, the referencing entity is also CostCenter. This is a self-reference.

They are used for:

-

Displaying Hierarchies: For example, a hierarchy of cost centers is built as CostCenter has a self-reference called CostCenterHasParentCostCenter.

-

Displaying Lists of Child Entities to navigate the list of entities referring a master entity. For example, a CostCenter can appear with a list of Employee belonging to this cost center according to the EmployeeHasCostCenter relation.

-

Display Links to navigate to the parent entity in a similar relation. The Employee entity will list a link to the CostCenter he belongs to.

-

Referential Integrity. It is enforced as part of the golden data certification process. References are also constraints on entities.

A reference is expressed in the model in the form of a foreign attribute that is added to the referencing attribute.

To create a reference relationship:

-

Right-click the Reference Relationships node in the model and select Add Reference…. The Create New Reference Relationship wizard opens.

-

In the Create New Reference Relationship wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the object.

-

Physical Name:Name of the database indexes created for optimizing access via this reference.

-

Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Validation Scope: Select whether the referential integrity should be checked pre and/or post consolidation. For more information, refer to the Integration Process Design chapter.

-

-

In the Referencing [0..*] group, enter the following values:

-

Referencing Entity: Select the entity which references the referenced (parent) entity. For example, in an EmployeeHasCostCenter relation, it is the Employee entity.

-

Referencing Role Name: Name used to refer to this entity from the referenced entity. For example, in an EmployeeHasCostCenter relation, it is the Employees.

-

Referencing Role Label: User-friendly label to refer to one record of this entity from the referenced entity. For example, in an EmployeeHasCostCenter relation, it is the Reporting Employee .

-

Referencing Role Plural Label: User-friendly label to refer to a list of records of this entity from the referenced entity. For example, in an EmployeeHasCostCenter relation, it is the Reporting Employees (plural) .

-

Referencing Navigable: Check this box to display the navigation items to this entity from the parent entity. For example, in an EmployeeHasCostCenter relation, selecting this option will make visible the Reporting Employees node under each CostCenter in the outline, and a list of Reporting Employees in the CostCenter master/detail page.

-

-

In the Referenced [0..1] group, enter the following values:

-

Referenced Entity: Select the entity which is referenced. For example, in an EmployeeHasCostCenter relation, it is the CostCenter entity.

-

Referenced Role Name: Name used to refer to this entity from the referencing entity. For example, in an EmployeeHasCostCenter relation, it is the CostCenter . This name is also the name given to the foreign attribute added to the referencing entity to express this relation.

-

Referenced Role Label: User-friendly label to refer to this entity from the referencing entity. For example, in an EmployeeHasCostCenter relation, it is the Cost Center.

-

Referenced Navigable: Check this box to display the navigation items to this entity from a child entity. For example, in an EmployeeHasCostCenter relation, selecting this option will make visible the link to open a parent CostCenter from an Employee page. Note that this link will show the Display Name defined for the CostCenter entity.

-

Mandatory: Define whether the reference to this entity is mandatory for the child. For example, in an EmployeeHasCostCenter relation, this option must be checked as an employee always belong to a cost center. For a CostCenterHasParentCostCenter reference, the option should not be checked as some cost centers may be at the root of my organization chart.

-

Physical Name: Name of the physical column created for the foreign attribute in the tables representing the referencing entity.

-

-

Click Finish to close the wizard. The Reference Relationship editor opens.

-

In the Description field, optionally enter a description for the Reference Relationship.

-

Press CTRL+S to save the editor.

-

Close the editor.

Data Quality Constraints

Data Quality Constraints include all the rules in the model that enforce a certain level of quality on the entities. These rules include:

-

Mandatory Attributes: An attribute must not have a null value. For example, the Phone attribute in the Customer entity must not be null.

-

References (Mandatory References): An entity with a non-mandatory reference must have a valid referenced entity or a null reference (no referenced entity). For mandatory references, the entity must have a valid reference and does not allow null references.

-

LOV Validation: An attribute with an LOV type must have all its values defined in the LOV. For example, the Gender attribute of the Customer entity is a LOV of type GenderLOV. It must have its values in the following range: [M:Male, F:Female].

-

Unique Keys: A group of column that has a unique value. For example, for a Product entity, the pair ProductFamilyName, ProductName must be unique.

-

Validations: A formula that must be valid on a given record. For example, a Customer entity must have either a valid Email or a valid Address.

The Mandatory Attributes and LOV Validations are designed when creating

the Entities. The references are defined when creating

Reference Relationships.

In this section, the Unique Keys and Validations are described.

Refer to the previous sections of the chapter for the other constraints.

More information:

-

Refer to the Integration Process Design chapter for more information about checking these constraints in the integration process.

-

Refer to the Creating Human Workflows section for more information about enforcing these constraints in the workflows.

Unique Keys

A Unique Key defines a group of attributes should be unique for an entity.

-

Expand the entity node, right-click the Unique Keys node and select Add Unique Key…. The Create New Unique Key wizard opens.

-

In the Create New Unique Key wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the object.

-

Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Validation Scope: Select whether the unique key should be checked pre and/or post consolidation. For more information, refer to the Integration Process Design chapter.

-

-

Click Next.

-

In the Key Attributes page, select the Available Attributes that you want to add and click the Add >> button to add them to the Key Attributes.

-

Use the Move Up and Move Down buttons to order the selected attributes.

-

Click Finish to close the wizard. The Unique Key editor opens.

-

In the Description field, optionally enter a description for the Unique Key.

-

Press CTRL+S to save the editor.

-

Close the editor.

| In the data certification processes unique keys are checked after the match and consolidation process, on the consolidated (merged) records. Possible unique key violations are not checked on the incoming (source records). |

| Unique keys can be checked in data entry workflows to verify whether records with the same key have been entered previously by a user. This option is available only for entities using ID Matching. Besides, source records submitted to the hub via external loads (not via workflows) are not taken into account in such check. A unicity check enforced in a workflow will behave similarly to the check performed in the data certification process only if the consolidation rule gives precedence to the data submitted by users via workflows over data submitted by applications via external loads, of if data is only submitted via workflows. |

Validations

A record-level validation validates the values of a given entity record against a rule. Several validations may exist on a single entity.

There are two types of validation:

-

SemQL Validations express the validation rule in the SemQL language. These validations are executed in the hub’s database.

-

Plug-in Validations use a Plug-in developed in Java. These validations are executed by Stambia MDM. Controls that cannot be done in within the database (for example that involve calling an external API) can be created using plug-in validations.

SemQL Validation

To create a SemQL validation:

-

Expand the entity node, right-click the Validations node and select Add SemQL Validation…. The Create New SemQL Validation wizard opens.

-

In the Create New SemQL Validation wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the object.

-

Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Description: optionally enter a description for the SemQL Validation.

-

Condition: Enter the SemQL condition that must be true for a valid record. You can use the

Edit Expression button to open the SemQL Editor .

Edit Expression button to open the SemQL Editor . -

Validation Scope: Select whether the SemQL Validation should be checked pre and/or post consolidation. For more information, refer to the Integration Process Design chapter.

-

-

Click Finish to close the wizard. The SemQL Validation editor opens.

-

Press CTRL+S to save the editor.

-

Close the editor.

Plug-in Validation

| Before using a plug-in validation, make sure the plug-in was added to the platform by the administrator. For more information, refer to the Stambia MDM Administration Guide. |

To create a plug-in validation:

-

Expand the entity node, right-click the Validations node and select Add Plug-in Validation…. The Create New Plug-in Validation wizard opens.

-

In the Create New Plug-in Validation wizard, check the Auto Fill option and then enter the following values:

-

Name: Internal name of the object.

-

Label: User-friendly label for this object. Note that as the Auto Fill box is checked, the Label is automatically filled in. Modifying this label is optional.

-

Plug-in ID: Select the plug-in from the list of plug-ins installed in the platform.

-

Validation Scope: Select whether the Validation should be checked pre and/or post consolidation. For more information, refer to the Integration Process Design chapter.

-

-

Click Finish to close the wizard. The Plug-in Validation editor opens. The Plug-in Params and Plug-in Inputs tables show the parameters and inputs for this plug-in.

-

You can optionally add parameters to the Plug-in Params list:

-

In the Plug-in Params table, click the Define Plug-in Parameters button.

-

In the Parameters dialog, select the Available Parameters that you want to add and click the Add >> button to add them to the Used Parameters.

-

Click Finish to close the dialog.

-

-

Set the values for the parameters:

-

Click the Value column in the Plug-in Params table in front a parameter. The cell becomes editable.

-

Enter the value of the parameter in the cell, and then press Enter.

-

Repeat the previous steps to set the value for the parameters.

-

-

You can optionally add inputs to the Plug-in Inputs list:

-

In the Plug-in Inputs table, click the Define Plug-in Inputs button.

-

In the Input Bindings dialog, select the Available Inputs that you want to add and click the Add >> button to add them to the Used Inputs.

-

Click Finish to close the dialog.

-

-

Set the values for the inputs:

-

Double-Click the Expression column in the Plug-in Inputs table in front an input. The SemQL editor opens.

-

Edit the SemQL expression using the attributes to feed the plug-in input and then click OK to close the SemQL Editor.

-

Repeat the previous steps to set an expression for the inputs.

-

-

Optionally, you can use Advanced Plug-in Configuration properties to optimize and configure the plug-in execution.

-

Press CTRL+S to save the editor.

-

Close the editor.

Diagrams

A Diagram is a graphical representation of a portion of the model or the

entire model.

Using the Diagram, not only you can make a model more readable, but you

can also create entities and references in a graphical manner, and

organize them as graphical shapes.

It is important to understand that a diagram only displays shapes which are graphical representations of the entities and references. These shapes are not the real entities and reference, but graphical artifacts in the diagram:

-

When you double click on a shape from the diagram, you access the actual entity or reference via the shape representing it.

-

It is possible to remove a shape from the diagram without deleting the entity or reference.

-