HBase ships with a command-line tool called 'importTsv', which loads data from HDFS into HBase tables efficiently.

When a large volume of data needs to be loaded into an HBase table, this tool can improve performance and reduce resource usage.

The Stambia templates can use this tool to load data from any database into HBase, with little configuration in the Metadata and the Mapping.
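For orientation, here is a minimal sketch of what an importTsv invocation generally looks like when assembled by hand. The table name, column mapping and HDFS folder are hypothetical, and the command that the templates actually generate may differ. The sketch only builds and prints the command line; it does not execute anything.

```python
import shlex


def build_importtsv_command(table, columns, hdfs_input_dir, separator=None):
    """Return the argument list for an ImportTsv run (nothing is executed)."""
    args = ["hbase", "org.apache.hadoop.hbase.mapreduce.ImportTsv"]
    # Map each input field, in order, to the row key or to a family:qualifier pair.
    args.append("-Dimporttsv.columns=" + ",".join(columns))
    if separator is not None:
        # ImportTsv expects tab-separated input unless a separator is given.
        args.append("-Dimporttsv.separator=" + separator)
    # The target table and the HDFS input folder come last.
    args += [table, hdfs_input_dir]
    return args


# Hypothetical table, column mapping and temporary folder.
cmd = build_importtsv_command(
    table="customers",
    columns=["HBASE_ROW_KEY", "info:name", "info:city"],
    hdfs_input_dir="/tmp/stambia/customers",
    separator=",",
)
print(shlex.join(cmd))
```

The special column name HBASE_ROW_KEY marks the input field that is used as the row key; every other entry names the column family and qualifier that receive the corresponding field.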

Metadata Configuration

The first step is to prepare the HBase Metadata, which needs the following information to work with the importTsv command:

- An HDFS temporary folder to store data

- An SSH Metadata that will be used to execute the importTsv command on the remote Hadoop server.

- (Optional) The Kerberos Keytab path on the remote Hadoop server, if the cluster is protected by Kerberos

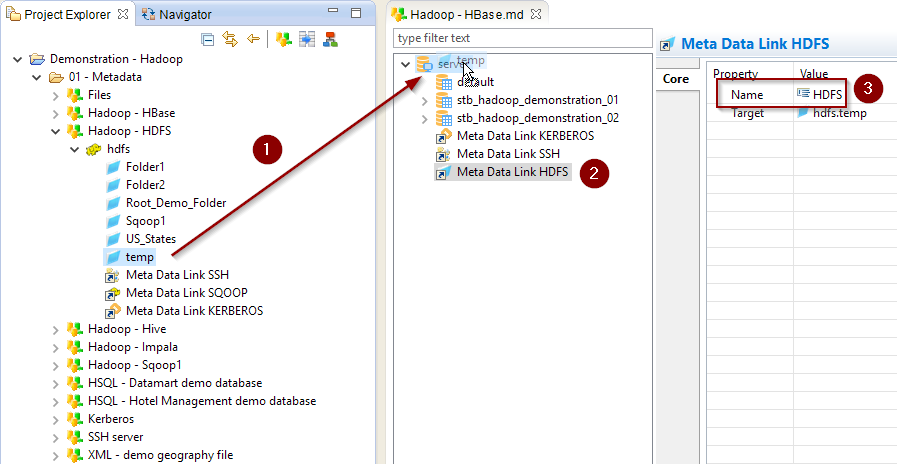

Specifying the HDFS Temporary folder

As the purpose of the importTsv tool is to load data from HDFS into HBase, a temporary HDFS folder is needed to store the source data before it is loaded into the target table.

Simply drag and drop the Metadata link of the HDFS folder you want to use as the temporary folder into the HBase Metadata.

Then, rename it to 'HDFS':

Refer to this dedicated article for further information about HDFS Metadata configuration.

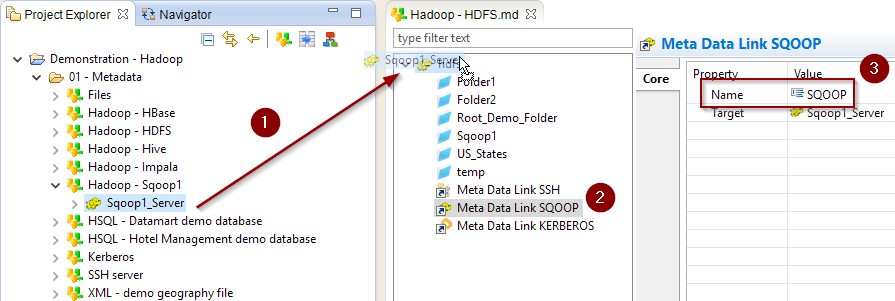
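To illustrate the role of the temporary folder, the sketch below builds the equivalent HDFS shell commands for staging a local extract. This is only an illustration under assumptions: the templates send the data themselves, and the file and folder names here are hypothetical. Nothing is executed.

```python
import shlex


def build_staging_commands(local_file, hdfs_dir):
    """Return the HDFS shell commands that stage one local file (not executed)."""
    return [
        # Create the temporary folder, including missing parent folders.
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],
        # Copy the extract into it, overwriting a file left by a previous run.
        ["hdfs", "dfs", "-put", "-f", local_file, hdfs_dir],
    ]


# Hypothetical local extract and temporary HDFS folder.
commands = build_staging_commands("customers.tsv", "/tmp/stambia/customers")
for command in commands:
    print(shlex.join(command))
```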

About Sqoop

By default, temporary data is sent to HDFS using the HDFS APIs.

You can also configure it to be sent to HDFS through the Sqoop Hadoop utility instead.

To do so, drag and drop a Sqoop Metadata Link into the Metadata of the HDFS temporary folder.

Then, rename it to 'SQOOP':

Refer to this dedicated article for further information about Sqoop Metadata configuration.

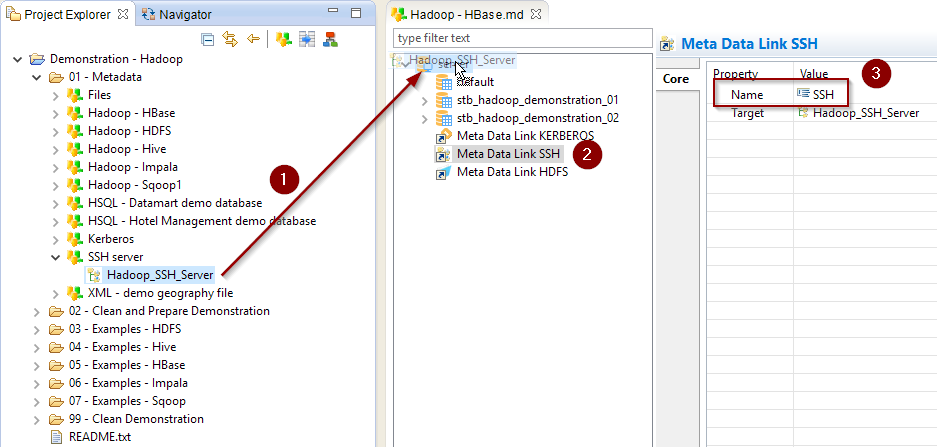
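As a rough idea of what a Sqoop transfer into the temporary folder involves, the following sketch assembles a generic `sqoop import` command. The JDBC URL, user, table and folder are hypothetical, authentication options are left out, and the options that the templates really pass may differ. The command is only printed, not executed.

```python
import shlex


def build_sqoop_import(jdbc_url, username, table, target_dir):
    """Return the argument list of a basic Sqoop import (not executed)."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--username", username,
        "--table", table,
        # HDFS folder that receives the extracted rows.
        "--target-dir", target_dir,
        # Tab-separated output, the default input format of importTsv.
        "--fields-terminated-by", "\\t",
        "--num-mappers", "1",
    ]


# Hypothetical source database and temporary HDFS folder.
sqoop_cmd = build_sqoop_import(
    "jdbc:mysql://db.example.com/sales", "etl", "customers",
    "/tmp/stambia/customers",
)
print(shlex.join(sqoop_cmd))
```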

Specifying the remote server information

Specifying SSH connection

The command will be executed through SSH on the remote Hadoop server.

The HBase Metadata therefore requires the information about how to connect to this server.

Simply drag and drop an SSH Metadata Link containing the SSH connection information into the HBase Metadata.

Then, rename it to 'SSH':

At the moment, the templates only support executing the command through SSH.

We are working on an update that will also allow the command to be executed locally on the Runtime, without requiring an SSH connection.

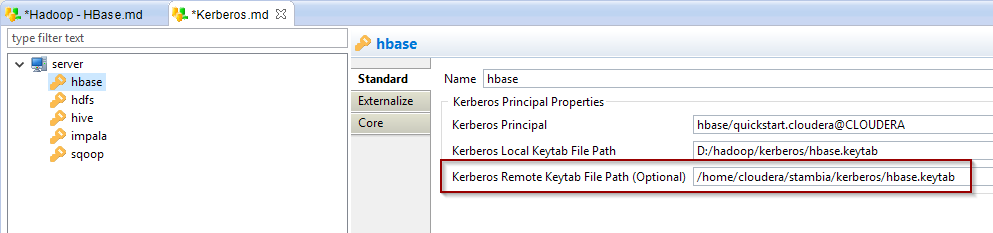
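The idea of running the command on the remote server can be sketched as wrapping it in a plain `ssh` call. This is an assumption-laden illustration, not what the templates do internally: the user, host and importTsv arguments below are hypothetical, and the command line is printed rather than executed.

```python
import shlex


def build_ssh_command(user, host, remote_args, port=22):
    """Wrap a remote command in an ssh invocation (not executed)."""
    # Quote the remote arguments so the remote shell receives them unchanged.
    remote_line = shlex.join(remote_args)
    return ["ssh", "-p", str(port), f"{user}@{host}", remote_line]


# Hypothetical importTsv call to run on the Hadoop server.
remote = [
    "hbase", "org.apache.hadoop.hbase.mapreduce.ImportTsv",
    "-Dimporttsv.columns=HBASE_ROW_KEY,info:name",
    "customers", "/tmp/stambia/customers",
]
ssh_cmd = build_ssh_command("hadoop", "hadoop-edge.example.com", remote)
print(shlex.join(ssh_cmd))
# To run it for real: subprocess.run(ssh_cmd, check=True)
```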

Specifying the Kerberos Keytab path

If the Hadoop cluster is secured with Kerberos, authentication must be performed on the server before the command is executed.

As the command is started through SSH, you need to indicate where the Keytab used to authenticate is located on the remote server.

To do so, specify the 'Kerberos Remote Keytab File Path' in the Kerberos Principal of the HBase Metadata:

- Refer to this dedicated article for further information about the Kerberos Metadata configuration.

- Refer to this dedicated article for further information about using Kerberos with HBase.
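Conceptually, authenticating before the command amounts to obtaining a ticket from the Keytab with `kinit` and then running importTsv in the same remote session. The sketch below shows that sequence under assumptions: the Keytab path, principal and importTsv arguments are hypothetical, and the exact line produced by the templates may differ. The line is only printed.

```python
import shlex


def with_kerberos_login(keytab_path, principal, command_args):
    """Prefix a command with a keytab-based kinit (returns a shell line)."""
    # -kt requests a ticket using the key stored in the given keytab file.
    kinit = shlex.join(["kinit", "-kt", keytab_path, principal])
    # '&&' ensures the command only runs if authentication succeeded.
    return kinit + " && " + shlex.join(command_args)


# Hypothetical remote keytab path, principal and importTsv call.
line = with_kerberos_login(
    "/etc/security/keytabs/etl.keytab",
    "etl@EXAMPLE.COM",
    [
        "hbase", "org.apache.hadoop.hbase.mapreduce.ImportTsv",
        "-Dimporttsv.columns=HBASE_ROW_KEY,info:name",
        "customers", "/tmp/stambia/customers",
    ],
)
print(line)
```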