As explained in the presentation article, Stambia can work with Google BigQuery powered by Google Cloud Platform (GCP) to perform operations on its tables, load data, reverse Metadata, ...

This article demonstrates how to work with Google BigQuery in Stambia.

This article is dedicated to Stambia DI 2020 (S20.0.0) and higher . If you are on a prior version please refer to this article instead.

Prerequisites:You must have previously installed Google Cloud Platform and Google BigQuery Components to be able to work with Google BigQuery.

Please refer to the following article that will guide you to accomplish this.

Metadata

When Stambia DI Google Cloud Platform Component and BigQuery Components are installed and configured, you can start creating your first Metadata.

This will then allow to perform the first reverses of Metadata and first Mapping Examples.

Metadata creation

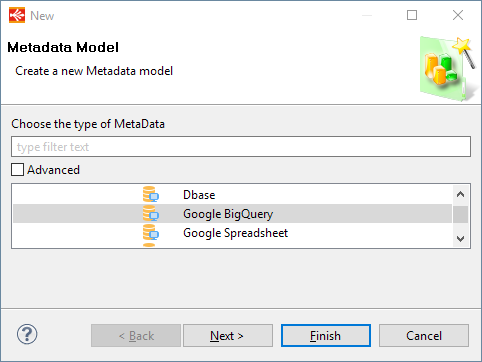

Create the Metadata, as usual, by selecting the technology in the Metadata Creation Wizard:

Click next, choose a name and click on finish.

Metadata Configuration

Google Cloud Credentials definition

When the Metadata is created, you can start defining common information about Google BigQuery access

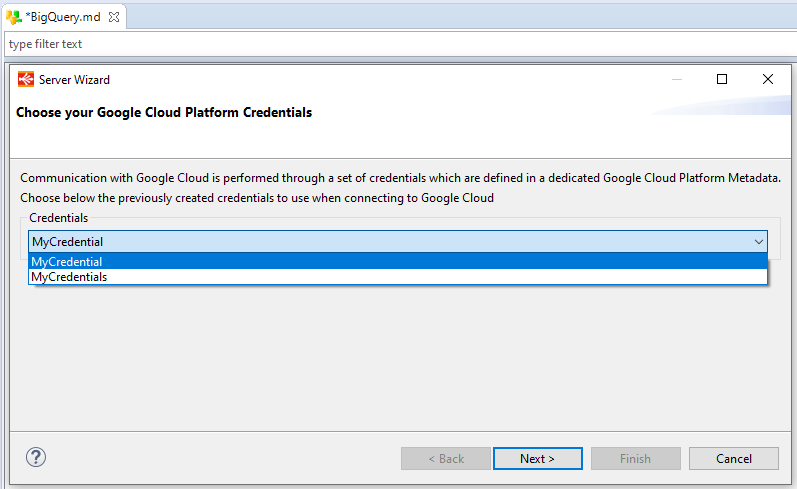

The first step is to define the Google Cloud Credentials that must be used to perform operations on Google BigQuery.

This is mandatory as this will be used to connect to the correct Google Cloud Project and with given credentials.

The BigQuery wizard will launch with the list of all existing credentials existing in the current workspace.

Credentials are defined in Google Cloud Project Metadata which must be created before, they contain login information about Google Cloud Platform Project to perform operations on.

Select one in the list and click on next to continue on the next step.

Connection and Reverse

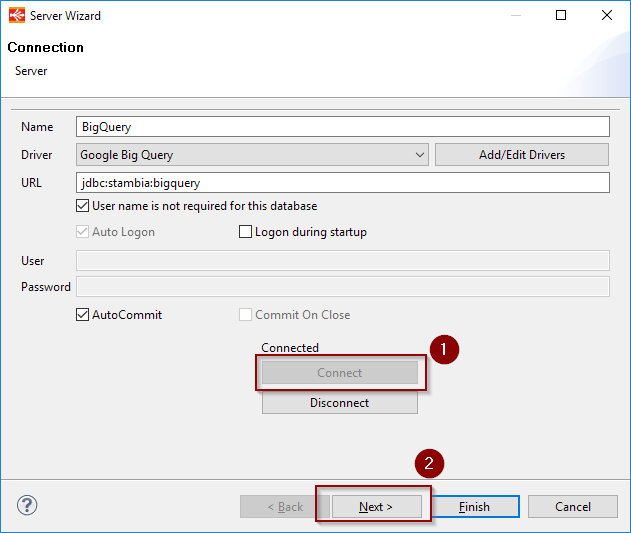

There is nothing else to configure, click on connect, and next to continue to dataset selection.

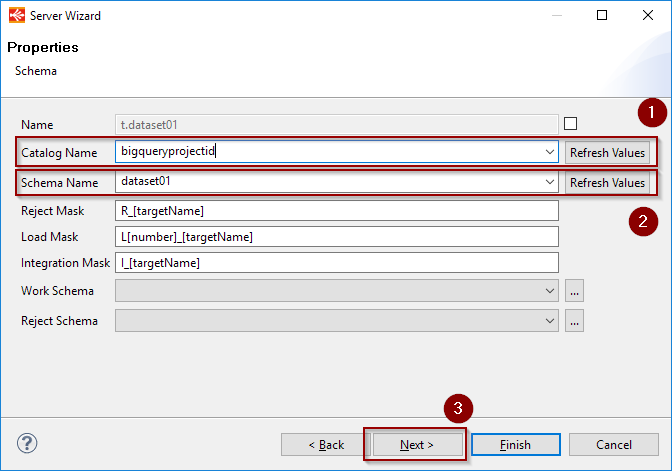

On the next window, click on refresh on the Catalog Name, then select the Google Project from the list.

Click on refresh on the Schema Name and select the Google BigQuery dataset to reverse from the list.

Finally click next, refresh the list of tables, and choose the ones to reverse.

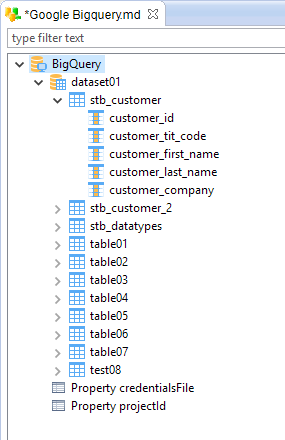

After having clicked on finish the tables will be reversed in the Metadata:

Storage methods

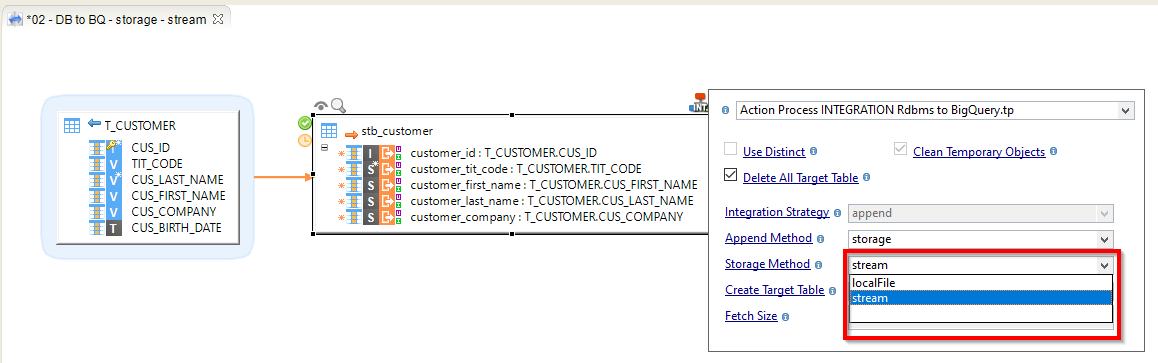

When integrating data into Google BigQuery using the storage method, you have the choice of how it should be done:

- stream: Data is streamed directly in the Google Storage Bucket.

- localfile: Data is first exported to a local temporary file, which is then sent to the defined Google Storage Bucket.

Depending on the amount of data sent and network quality, for instance, one method or the other can have better performances.

Simply, drag and drop the temporary bucket from a Google Cloud Storage metadata node, wich should have been created before :

The storage method is defined on the Template:

You can now start using them in your Mappings and Processes.

A few examples can be found in the next section, and a Demonstration Project is available for more complete examples.

Examples

Below are some examples of usages in Mappings in Processes:

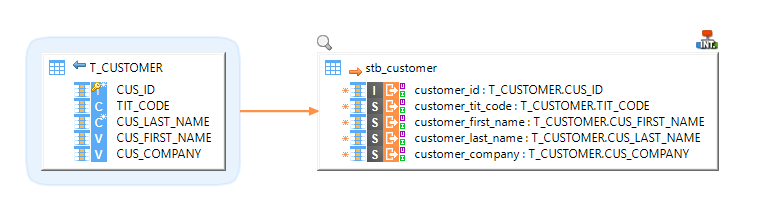

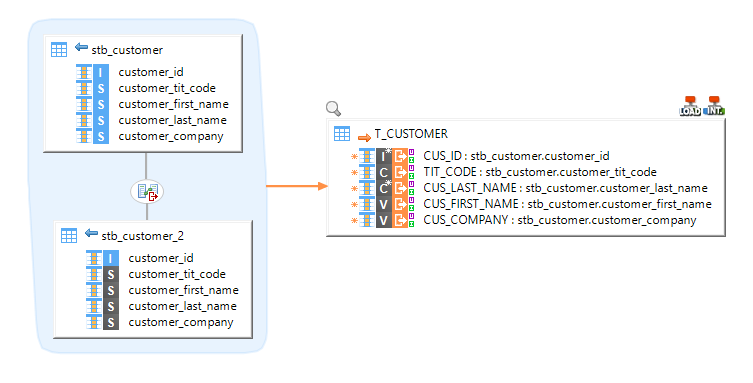

Example of Mapping loading data from an HSQL database to a Google BigQuery table

Example of Mapping loading data from multiple BigQuery tables with joins to an HSQL table

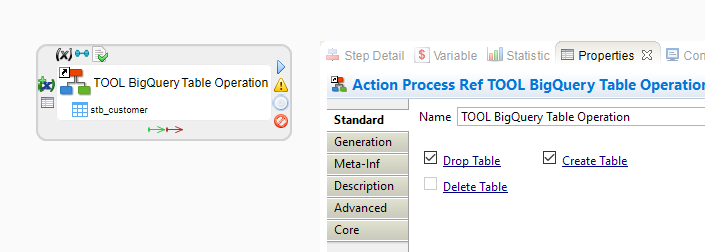

Example of a Process dropping and re-creating a BigQuery table

Demonstration Project

Demonstration projects presenting common and advanced usage of the Google Cloud Platform and Google BigQuery in Stambia DI are available.

You can import them it in your workspace and then have a look at the Mappings and Processes examples.

It is a good start to familiarize with its usage and to see how the Metadata are configured, for instance.

From Stambia DI 2020 and higher, the sample projects are shipped withing the Components, and can be imported directly from the Designer as explained in Components getting started guide.

On Stambia DI S17, S18 and S19, you must download the sample projects separately from the download section.