This article describes the main changes in the Kafka Component.

If you need further information, please consult the full changelog.

The Kafka Component download section can be found on this page.

Note:

Stambia DI is a flexible and agile solution. It can be quickly adapted to your needs.

If you have any questions, feature requests, or issues, do not hesitate to contact us.

This article is dedicated to Stambia DI 2020 (S20.x.x) or higher.

If you are using Stambia DI S17, S18, or S19, please refer to this article.

Component.Kafka.2.0.2

Synchronous sending mode

Previously, the Kafka Component sent messages asynchronously, without waiting for an acknowledgment from the Kafka broker (fire and forget).

This provided better performance when sending large volumes of messages.

However, it prevented message status and statistics from being computed properly.

A new option now lets you choose how messages are sent, so that they can also be sent synchronously.

Synchronous mode is now the default. It provides better management of the sending process and allows message status and statistics to be tracked properly.

Asynchronous mode is still available if you need better performance and do not need statistics about the messages sent.
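To illustrate why only synchronous mode can report reliable statistics, here is a minimal sketch that simulates both modes with a thread pool. It is loosely modeled on how the Apache Kafka Java producer's `send()` returns a `Future` that the caller may or may not wait on; it is not the component's actual implementation. The `broker_ack` function is a hypothetical stand-in for the broker's acknowledgment, which rejects any message containing "bad".

```python
from concurrent.futures import ThreadPoolExecutor


def broker_ack(message: str) -> str:
    """Hypothetical broker stand-in: reject messages containing 'bad'."""
    if "bad" in message:
        raise ValueError(f"rejected: {message}")
    return "ack"


def send_sync(messages, pool):
    """Synchronous mode: wait for each acknowledgment and record statistics."""
    stats = {"sent": 0, "failed": 0}
    for msg in messages:
        future = pool.submit(broker_ack, msg)
        try:
            future.result()  # block until the (simulated) broker answers
            stats["sent"] += 1
        except ValueError:
            stats["failed"] += 1
    return stats


def send_async(messages, pool):
    """Asynchronous (fire-and-forget) mode: submit and move on without waiting."""
    for msg in messages:
        pool.submit(broker_ack, msg)  # the result, or error, is never inspected
    return None  # no per-message status is available to the caller


if __name__ == "__main__":
    with ThreadPoolExecutor(max_workers=4) as pool:
        print(send_sync(["a", "bad-1", "b"], pool))  # {'sent': 2, 'failed': 1}
        send_async(["c", "bad-2"], pool)  # the rejection of "bad-2" goes unnoticed
```

The synchronous loop pays the cost of one round trip per message, which is the performance trade-off described above, but in exchange it knows exactly how many messages succeeded or failed.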

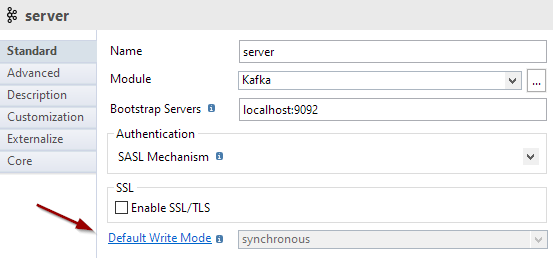

Defining the writing mode in Metadata

A new attribute is available in Metadata to choose the writing mode.

This lets you define a default value for the Mappings and Processes that use this Metadata.

Note that you can define a default value in Metadata and easily override it in Mappings, as described in the next section.

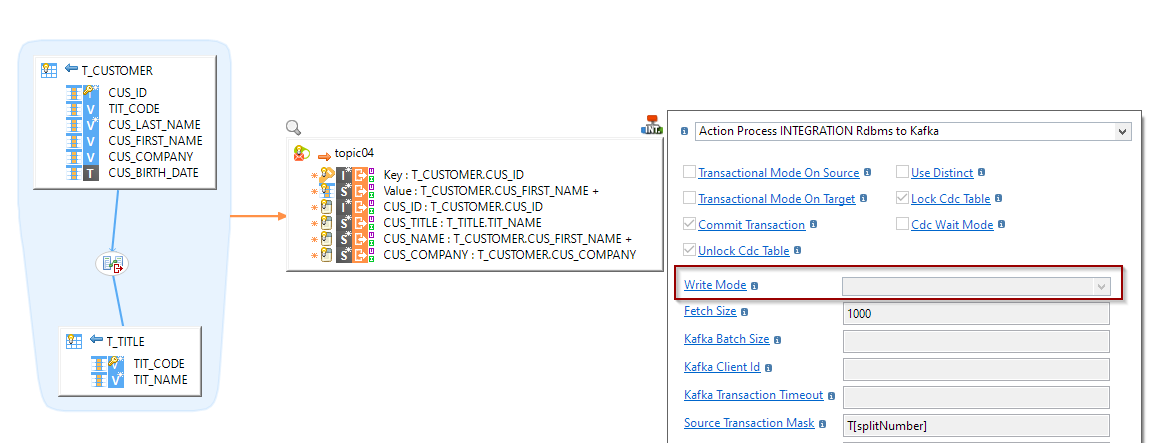

Defining the writing mode in Mapping

The writing mode can also be customized in Mappings, on the Kafka Integration Templates.

The value defined on the Template overrides the default value defined in Metadata.

If no value is defined, the Metadata default value is used.
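The override rule can be summarized as a small resolution function. This is a minimal sketch under stated assumptions: the mode names `SYNCHRONOUS` and `ASYNCHRONOUS` and the function `resolve_write_mode` are hypothetical, and an empty or missing Template value is assumed to mean "not defined".

```python
from typing import Optional

# Hypothetical mode names; the values actually offered by the
# "Write Mode" parameter may be labeled differently.
VALID_MODES = {"SYNCHRONOUS", "ASYNCHRONOUS"}


def resolve_write_mode(template_value: Optional[str], metadata_default: str) -> str:
    """Return the effective write mode.

    The Template value wins when it is set; otherwise the Metadata
    default applies. None or an empty string counts as "not defined".
    """
    mode = template_value if template_value else metadata_default
    if mode not in VALID_MODES:
        raise ValueError(f"unknown write mode: {mode!r}")
    return mode


if __name__ == "__main__":
    print(resolve_write_mode(None, "SYNCHRONOUS"))            # SYNCHRONOUS
    print(resolve_write_mode("ASYNCHRONOUS", "SYNCHRONOUS"))  # ASYNCHRONOUS
```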

The new parameter is named "Write Mode" and is available on the Kafka Integration Templates.

As a reminder, there are currently two: "INTEGRATION Rdbms to Kafka" and "INTEGRATION File to Kafka".


INTEGRATION Kafka To File Template

New Batch Size parameter

A new "Batch Size" parameter defines how many messages must be read from the topic before they are written to the file.

Messages are written to the file every "n" messages received, "n" being the batch size.

If you define a large timeout so that the Mapping reads data continuously, make sure to set an appropriate value for this parameter; otherwise, received messages may wait until enough have arrived to fill a batch.

For example, set it to "1" if you want each message to be written to the file as soon as it is read.
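The batching behavior can be sketched as a buffer that is written out each time it reaches the batch size. This is a minimal illustration, not the Template's implementation: the function `write_in_batches` is hypothetical, and flushing a final partial batch when reading stops is an assumption about end-of-read behavior.

```python
import io
from typing import Iterable, TextIO


def write_in_batches(messages: Iterable[str], out: TextIO, batch_size: int) -> int:
    """Buffer messages and write them to `out` every `batch_size` messages.

    Returns the number of write operations performed. A partial batch left
    when the input ends is also written (assumed end-of-read behavior).
    """
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    buffer = []
    writes = 0
    for msg in messages:
        buffer.append(msg)
        if len(buffer) == batch_size:  # batch is full: write it out
            out.write("".join(m + "\n" for m in buffer))
            out.flush()
            buffer.clear()
            writes += 1
    if buffer:  # remaining messages when reading stops
        out.write("".join(m + "\n" for m in buffer))
        out.flush()
        writes += 1
    return writes


if __name__ == "__main__":
    sink = io.StringIO()
    print(write_in_batches(["m1", "m2", "m3", "m4", "m5"], sink, batch_size=2))  # 3
```

With `batch_size=1`, every message triggers its own write, which matches the "write as soon as it is read" case. With a larger value and a continuous stream, messages stay in the buffer until the batch fills, which is why the parameter matters when the timeout is large.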

Change Data Capture (CDC)

Several improvements have been made to harmonize the use of Change Data Capture (CDC) across the various Components.

Parameters have been harmonized so that all Templates should now share the same CDC parameters and support the same features.

Several CDC issues have also been fixed. Refer to the changelog for the exact list of changes.

Minor improvements and fixed issues

This version also contains other minor improvements and fixes, which are listed in the complete changelog.

Complete changelog

The complete changelog with the list of improvements and fixed issues can be found at the following location.

Component.Kafka.2.0.1

Sample project

The component's sample project can now be imported directly from the "New" menu of the Project Explorer.